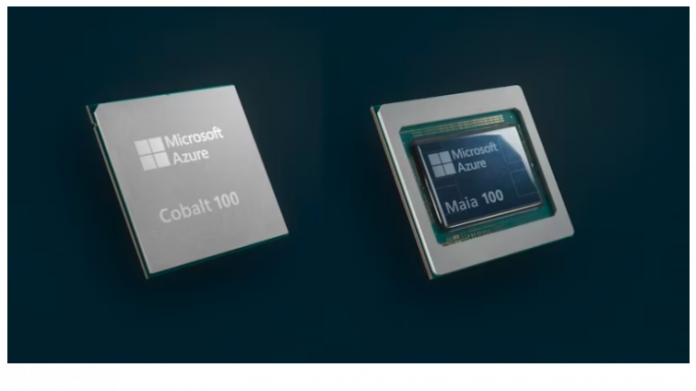

On November 15, Microsoft announced a pair of custom chips, the Azure Maia 100 AI accelerator and the Azure Cobalt 100 CPU, to power its in-house AI and cloud computing workloads.

The Maia 100 chip is intended to train AI models and run large language models, whereas Cobalt 100, a custom Arm-based CPU, is intended to handle general-purpose computing workloads.

“We are reimagining every aspect of our data centers to meet the needs of our customers, and we are building the infrastructure to support AI innovation,” said Scott Guthrie, executive vice president of Microsoft’s Cloud + AI Group.

According to Microsoft, the Maia 100 is manufactured on a 5 nm process and designed specifically for the Azure hardware stack. The company says the AI accelerator will power some of the largest internal AI workloads running on the Microsoft Azure cloud computing service, such as Bing, Microsoft 365, and the Azure OpenAI Service.

The Cobalt 100 chip has 128 cores and is based on the Arm Neoverse CSS design, which Microsoft says is aimed at “delivering greater efficiency and performance in cloud native offerings.”

Both custom chips will begin rolling out to Azure data centers early next year. The American tech giant will not sell the chips; it will use them internally to power its own AI and cloud services.

In addition to its own custom chips, Microsoft plans to add the NVIDIA H200 Tensor Core GPU to its Azure fleet next year to support larger language models. The company has also collaborated with NVIDIA to train mid-sized generative AI models on NVIDIA H100 Tensor Core GPUs.
